DeepSeek V3 is a large language model with 671 billion total parameters, of which 37 billion are activated for each token. It is built on a Mixture-of-Experts (MoE) architecture and delivers strong results across a wide range of benchmarks. Inference stays efficient because only a small subset of its parameters runs for any given token.

DeepSeek V3

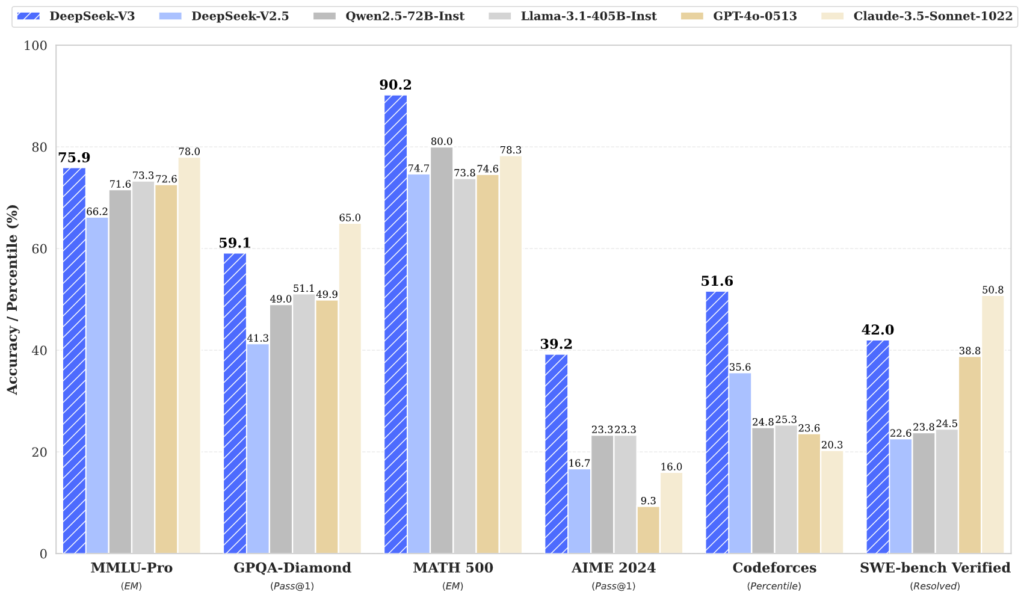

DeepSeek V3 handles tasks across multiple domains, from code generation to answering complex questions.

Download DeepSeek V3 models: choose between the base model (DeepSeek-V3-Base) and the chat-tuned version (DeepSeek-V3).

| Model | Total Params | Activated Params | Context Length | Download |
|---|---|---|---|---|
| DeepSeek-V3-Base | 671B | 37B | 128K | Hugging Face |
| DeepSeek-V3 | 671B | 37B | 128K | Hugging Face |
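As a quick illustration of what the parameter counts in the table imply, the short Python sketch below computes the share of weights that take part in processing each token. It then compares per-token compute with a hypothetical dense model of the same total size. The "about 2 FLOPs per active parameter per token" figure is an assumed rule of thumb that ignores attention and routing overhead, so treat the ratio as a rough estimate rather than a measured result.

```python
# Parameter counts taken from the table, in billions.
TOTAL_PARAMS_B = 671
ACTIVE_PARAMS_B = 37

# Fraction of all weights that participate in processing a single token.
active_fraction = ACTIVE_PARAMS_B / TOTAL_PARAMS_B
print(f"Active per token: {active_fraction:.1%}")  # Active per token: 5.5%

# Assumed rule of thumb: a forward pass costs roughly 2 FLOPs per parameter
# that participates, ignoring attention and routing overhead.
flops_per_token_moe = 2 * ACTIVE_PARAMS_B * 1e9
# Hypothetical dense model in which all 671B parameters run for every token.
flops_per_token_dense = 2 * TOTAL_PARAMS_B * 1e9

ratio = flops_per_token_dense / flops_per_token_moe
print(f"Dense/MoE compute ratio: {ratio:.1f}x")  # Dense/MoE compute ratio: 18.1x
```

Under these assumptions, each token touches roughly one-eighteenth of the compute a same-sized dense model would need. This is the practical meaning of "37B activated out of 671B".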

About DeepSeek V3

Announced in December 2024, DeepSeek V3 is built on a Mixture-of-Experts architecture and can handle a wide range of tasks. The model has 671 billion total parameters (37 billion activated per token) and a context length of 128K tokens.
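The Mixture-of-Experts idea can be sketched in a few lines. The example below is a minimal, hypothetical MoE layer that uses a generic top-k softmax gate, a common MoE design. It is not DeepSeek V3's actual router. The function names `moe_forward` and `top_k_route` and the toy scaling "experts" are illustrative assumptions. The key point is that the router scores every expert but only the k highest-scoring experts run. This is how a model can have far more total parameters than it activates per token.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]


def softmax(xs: Sequence[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_route(logits: Sequence[float], k: int) -> List[Tuple[int, float]]:
    """Pick the k highest-scoring experts and renormalize their gate weights."""
    chosen = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    gates = softmax([logits[i] for i in chosen])
    return list(zip(chosen, gates))


def moe_forward(token: Vector,
                router: Sequence[Vector],
                experts: Sequence[Callable[[Vector], Vector]],
                k: int) -> Tuple[Vector, List[int]]:
    """Hypothetical MoE layer: score every expert, but run only the top-k."""
    # Router logit for each expert = dot product of the token with its router row.
    logits = [sum(t * r for t, r in zip(token, row)) for row in router]
    out = [0.0] * len(token)
    used = []
    for idx, gate in top_k_route(logits, k):
        used.append(idx)
        y = experts[idx](token)  # only the selected experts do any work
        out = [o + gate * v for o, v in zip(out, y)]  # gate-weighted sum
    return out, used


# Toy setup (hypothetical): 8 experts, each just scales its input; 2 run per token.
experts = [lambda x, s=s: [s * v for v in x] for s in range(1, 9)]
router = [[float(i), -float(i)] for i in range(8)]
out, used = moe_forward([1.0, 0.0], router, experts, k=2)
print(used)  # [7, 6]
```

Here only 2 of the 8 toy experts run for the token. DeepSeek V3 applies the same principle at a much larger scale, activating 37B of its 671B parameters per token.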